Meta Newsroom release:

As a company that’s been at the cutting edge of AI development for more than a decade, it’s been hugely encouraging to witness the explosion of creativity from people using our new generative AI tools, like our Meta AI image generator which helps people create pictures with simple text prompts.

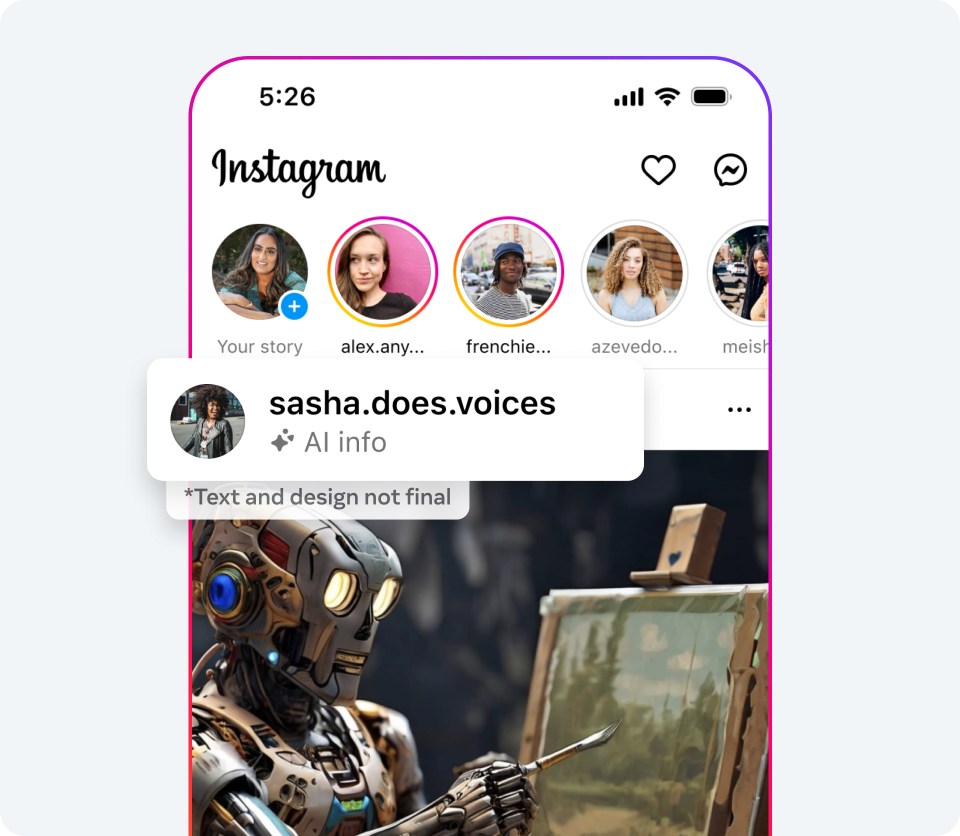

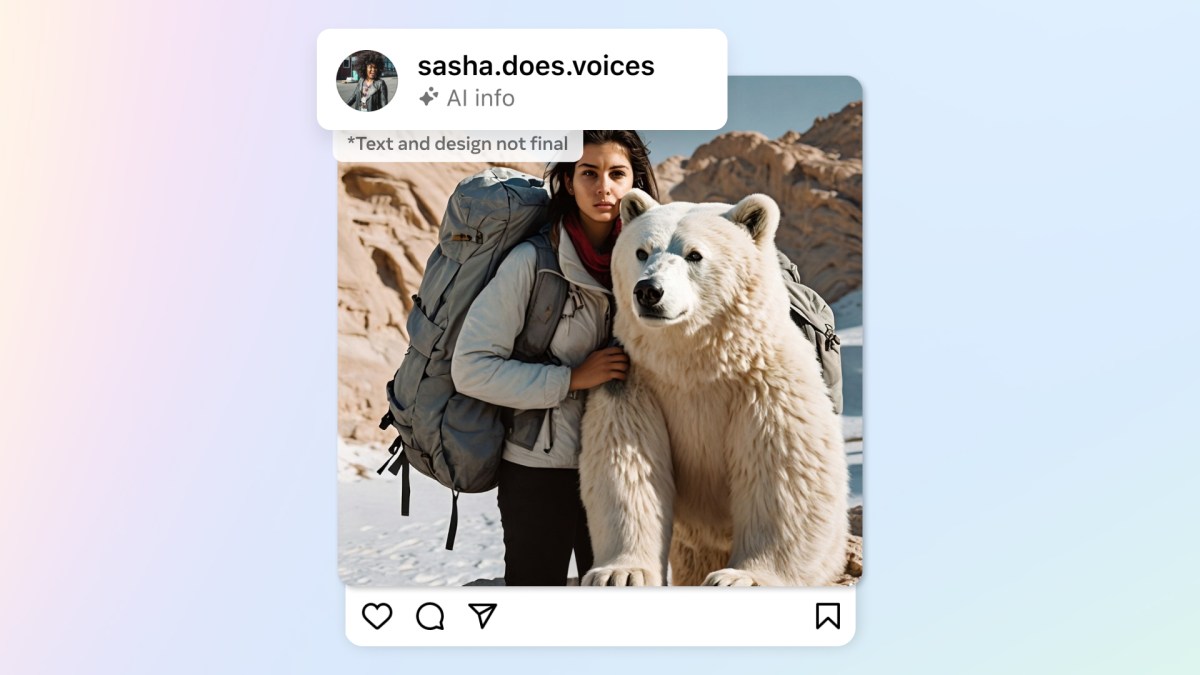

As the difference between human and synthetic content gets blurred, people want to know where the boundary lies. People are often coming across AI-generated content for the first time and our users have told us they appreciate transparency around this new technology. So it’s important that we help people know when photorealistic content they’re seeing has been created using AI. We do that by applying “Imagined with AI” labels to photorealistic images created using our Meta AI feature, but we want to be able to do this with content created with other companies’ tools too.

That’s why we’ve been working with industry partners to align on common technical standards that signal when a piece of content has been created using AI. Being able to detect these signals will make it possible for us to label AI-generated images that users post to Facebook, Instagram and Threads. We’re building this capability now, and in the coming months we’ll start applying labels in all languages supported by each app. We’re taking this approach through the next year, during which a number of important elections are taking place around the world. During this time, we expect to learn much more about how people are creating and sharing AI content, what sort of transparency people find most valuable, and how these technologies evolve. What we learn will inform industry best practices and our own approach going forward.

A New Approach to Identifying and Labeling AI-Generated Content

When photorealistic images are created using our Meta AI feature, we do several things to make sure people know AI is involved, including placing visible markers on the images and embedding both invisible watermarks and metadata within image files. Using invisible watermarking and metadata together in this way both improves the robustness of these invisible markers and helps other platforms identify them. This is an important part of the responsible approach we’re taking to building generative AI features.

Since AI-generated content appears across the internet, we’ve been working with other companies in our industry to develop common standards for identifying it through forums like the Partnership on AI (PAI). The invisible markers we use for Meta AI images – IPTC metadata and invisible watermarks – are in line with PAI’s best practices.

We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.
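To make the idea of reading an “AI generated” signal from metadata concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of Meta’s detection tools: it assumes the image carries an XMP packet in which the IPTC Digital Source Type is set to the IPTC NewsCodes value `trainedAlgorithmicMedia`, and it simply searches the file’s bytes for that value. The function name `has_ai_generated_marker` and the sample bytes are hypothetical. It does not validate C2PA manifests, which are cryptographically signed and need a dedicated validator.

```python
import re

# IPTC NewsCodes URI for media created by a generative model.
TRAINED_ALGORITHMIC_MEDIA = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)

# XMP metadata is stored as an XML packet inside the image file.
XMP_PACKET = re.compile(rb"<x:xmpmeta.*?</x:xmpmeta>", re.DOTALL)


def has_ai_generated_marker(image_bytes: bytes) -> bool:
    """Return True if any embedded XMP packet names the AI source type.

    Assumption: a plain byte search is enough for illustration. A real
    system would parse the XMP properly and also check other signals.
    """
    needle = TRAINED_ALGORITHMIC_MEDIA.encode("ascii")
    return any(needle in m.group(0) for m in XMP_PACKET.finditer(image_bytes))


# Hypothetical sample data standing in for real image files.
labeled = (
    b"\xff\xd8...image data..."
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b'<rdf:Description Iptc4xmpExt:DigitalSourceType="'
    + TRAINED_ALGORITHMIC_MEDIA.encode("ascii")
    + b'"/></x:xmpmeta>'
)
unlabeled = b"\xff\xd8...image data with no XMP packet..."

print(has_ai_generated_marker(labeled))
print(has_ai_generated_marker(unlabeled))
```

A check like this only works while the metadata is still attached to the file, which is why metadata is paired with invisible watermarks rather than relied on alone.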

While companies are starting to include signals in their image generators, they haven’t started including them in AI tools that generate audio and video at the same scale, so we can’t yet detect those signals and label this content from other companies. While the industry works towards this capability, we’re adding a feature for people to disclose when they share AI-generated video or audio so we can add a label to it. We’ll require people to use this disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered, and we may apply penalties if they fail to do so. If we determine that digitally created or altered image, video or audio content creates a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label if appropriate, so people have more information and context.

This approach represents the cutting edge of what’s technically possible right now. But it’s not yet possible to identify all AI-generated content, and there are ways that people can strip out invisible markers. So we’re pursuing a range of options. We’re working hard to develop classifiers that can help us to automatically detect AI-generated content, even if the content lacks invisible markers. At the same time, we’re looking for ways to make it more difficult to remove or alter invisible watermarks. For example, Meta’s AI Research lab FAIR recently shared research on an invisible watermarking technology we’re developing called Stable Signature. This integrates the watermarking mechanism directly into the image generation process for some types of image generators, which could be valuable for open source models so the watermarking can’t be disabled.

This work is especially important as this is likely to become an increasingly adversarial space in the years ahead. People and organizations that actively want to deceive people with AI-generated content will look for ways around safeguards that are put in place to detect it. Across our industry and society more generally, we’ll need to keep looking for ways to stay one step ahead.

In the meantime, it’s important people consider several things when determining if content has been created by AI, like checking whether the account sharing the content is trustworthy or looking for details that might look or sound unnatural.

These are early days for the spread of AI-generated content. As it becomes more common in the years ahead, there will be debates across society about what should and shouldn’t be done to identify both synthetic and non-synthetic content. Industry and regulators may move towards ways of authenticating content that hasn’t been created using AI as well as content that has. What we’re setting out today are the steps we think are appropriate for content shared on our platforms right now. But we’ll continue to watch and learn, and we’ll keep our approach under review as we do. We’ll keep collaborating with our industry peers. And we’ll remain in a dialogue with governments and civil society.

AI Is Both a Sword and a Shield

Our Community Standards apply to all content posted on our platforms regardless of how it is created. When it comes to harmful content, the most important thing is that we are able to catch it and take action regardless of whether or not it has been generated using AI. And the use of AI in our integrity systems is a big part of what makes it possible for us to catch it.

We’ve used AI systems to help protect our users for a number of years. For example, we use AI to help us detect and address hate speech and other content that violates our policies. This is a big part of the reason why we’ve been able to cut the prevalence of hate speech on Facebook to just 0.01-0.02% (as of Q3 2023). In other words, for every 10,000 content views, we estimate just one or two will contain hate speech.
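The conversion from a prevalence percentage to views per 10,000 can be checked with a few lines of Python. This is only a worked version of the arithmetic in the paragraph above, and the function name `views_with_hate_speech` is made up for illustration.

```python
def views_with_hate_speech(prevalence_percent: float, views: int = 10_000) -> float:
    """Convert a prevalence percentage into an expected count per `views` views."""
    return prevalence_percent / 100 * views


# The reported range of 0.01% to 0.02%, rounded to hide floating-point noise.
low = round(views_with_hate_speech(0.01), 6)
high = round(views_with_hate_speech(0.02), 6)
print(low, high)
```

The two ends of the range work out to one and two views per 10,000, matching the estimate in the text.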

While we use AI technology to help enforce our policies, our use of generative AI tools for this purpose has been limited. But we’re optimistic that generative AI could help us take down harmful content faster and more accurately. It could also be useful in enforcing our policies during moments of heightened risk, like elections. We’ve started testing Large Language Models (LLMs) by training them on our Community Standards to help determine whether a piece of content violates our policies. These initial tests suggest the LLMs can perform better than existing machine learning models. We’re also using LLMs to remove content from review queues in certain circumstances when we’re highly confident it doesn’t violate our policies. This frees up capacity for our reviewers to focus on content that’s more likely to break our rules.

AI-generated content is also eligible to be fact-checked by our independent fact-checking partners and we label debunked content so people have accurate information when they encounter similar content across the internet.

Meta has been a pioneer in AI development for more than a decade. We know that progress and responsibility can and must go hand in hand. Generative AI tools offer huge opportunities, and we believe that it is both possible and necessary for these technologies to be developed in a transparent and accountable way. That’s why we want to help people know when photorealistic images have been created using AI, and why we are being open about the limits of what’s possible too. We’ll continue to learn from how people use our tools in order to improve them. And we’ll continue to work collaboratively with others through forums like PAI to develop common standards and guardrails.

Source:

Labeling AI-Generated Images on Facebook, Instagram and Threads

Nick Clegg offers a new approach to identifying and labeling AI-generated content.