josh7777777

- OS

- Windows 11

I've been setting up some virtualization, and my original understanding was that VHDX carried only a slight performance hit.

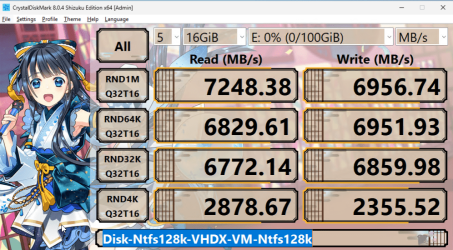

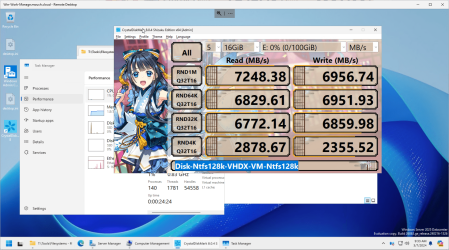

However, once I actually tested them, it turned out to be more like a 50% or greater hit to performance! And every time I add a virtualization layer (e.g. a VHDX inside a Hyper-V guest that itself runs on a VHDX), performance drops by another 50%.
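Taking the figures above at face value, the slowdown compounds multiplicatively rather than additively. A minimal sketch of that model, assuming each VHDX layer independently keeps about 50% of the throughput of the layer below it (the `retained_fraction` parameter is an illustrative assumption, not a measured constant):

```python
def throughput_after_layers(bare_throughput: float, layers: int,
                            retained_fraction: float = 0.5) -> float:
    """Estimate throughput after stacking `layers` virtualization layers.

    Assumes (hypothetically) that every layer keeps the same fraction of the
    throughput of the layer beneath it, so the losses multiply.
    """
    if layers < 0:
        raise ValueError("layers must be non-negative")
    if not 0.0 < retained_fraction <= 1.0:
        raise ValueError("retained_fraction must be in (0, 1]")
    return bare_throughput * retained_fraction ** layers


# Relative performance (bare drive = 1.0) for zero to three stacked layers.
for n in range(4):
    print(n, throughput_after_layers(1.0, n))
```

Under this model, a VHDX inside a Hyper-V guest whose own disk is a VHDX (two layers) would be left with roughly a quarter of the bare-drive random I/O.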

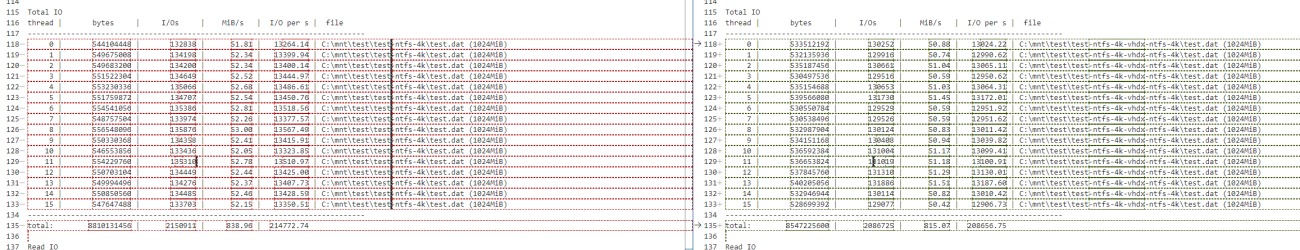

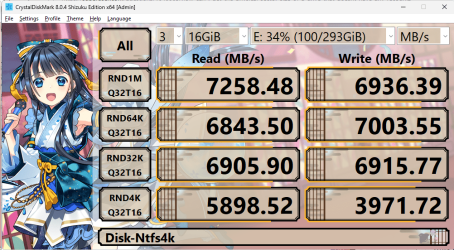

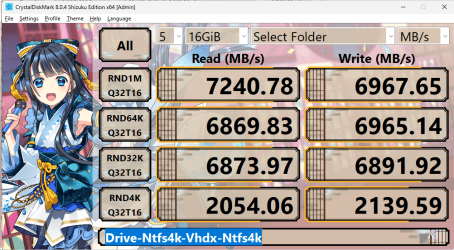

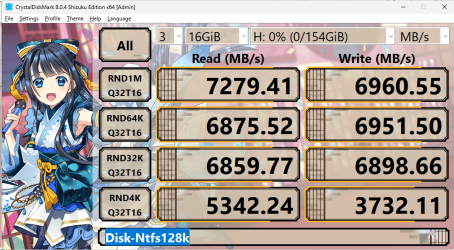

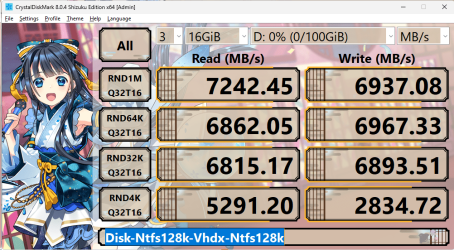

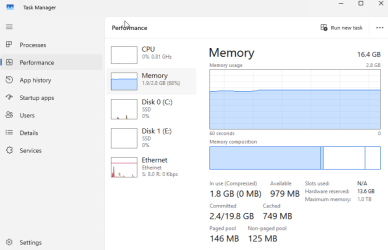

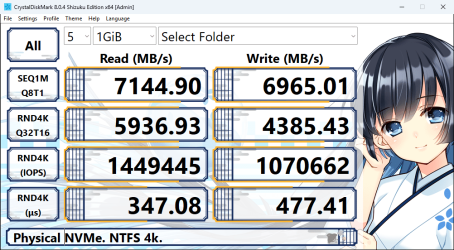

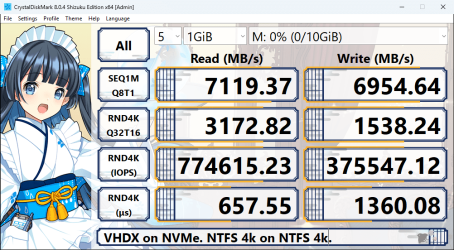

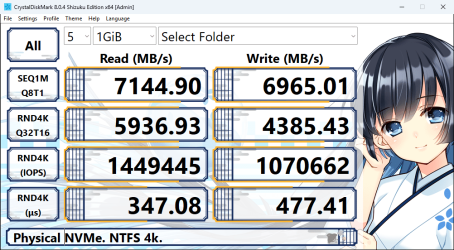

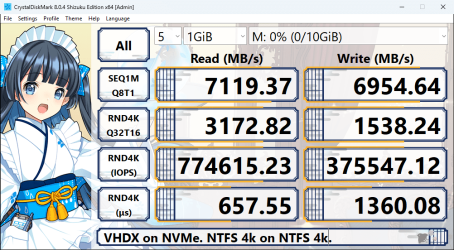

I've done quite a bit of reading, and I can't see any obvious reasons why the performance should be so bad. Here are some screenshots of my tests on the bare NVMe drive versus the mounted VHDX (which is on the same drive). I'm running the tests one at a time, so they're not affecting each other.

Regardless of what I do, the first test (sequential read/write) is almost always the same on both. However, the next three tests (random read/write) come out roughly half as fast on the VHDX.
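To put a number on "half as fast", one option is to divide each VHDX result by the matching bare-drive result. A minimal sketch, assuming the four benchmark results from each run are typed in by hand as MB/s values keyed by test name; any test labels you use are your own placeholders, not taken from the screenshots:

```python
def relative_performance(bare: dict[str, float],
                         vhdx: dict[str, float]) -> dict[str, float]:
    """Return vhdx / bare for every test present in both result sets.

    1.0 means the VHDX matched the bare drive; 0.5 means it reached half
    the bare-drive throughput. Tests missing from either set, or with a
    non-positive bare value, are skipped.
    """
    ratios = {}
    for test, bare_value in bare.items():
        if test in vhdx and bare_value > 0:
            ratios[test] = vhdx[test] / bare_value
    return ratios
```

Filling `bare` and `vhdx` from the same benchmark run on each target gives one ratio per test; random-I/O tests landing near 0.5 would match the pattern described above.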

I'm wondering whether the material I've read so far just doesn't account for how damn fast these NVMe drives are, and VHDX simply can't keep up.

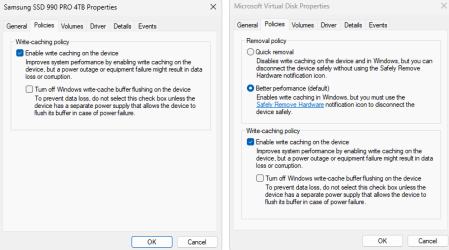

These are Samsung 4TB 990 Pro drives.

I've tried a lot of different combinations, and the results are all about the same: ReFS instead of NTFS, exFAT, 64K instead of 4K, striped Storage Spaces, parity, and mirrored. The first (sequential I/O) test is usually pretty close, and the next three (random I/O) are 50% slower or worse.

- Windows Build/Version

- Windows Server Datacenter 2022

My Computer

System One

- Computer type

- PC/Desktop